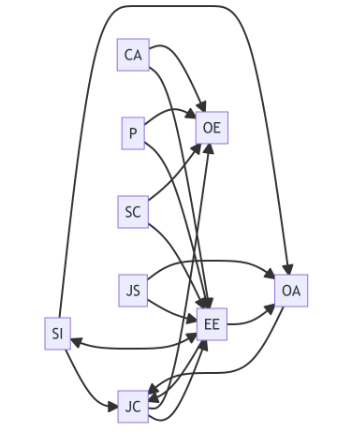

I’m working on a manuscript on the topic of selecting the correct research method and design to answer research questions, and I wanted to share an example of a study that went sideways. Ambrose (2022) posited five research questions that focused on the relationship between nine variables. To graphically depict the research questions, see this figure:

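It looks convoluted to me! What’s more convoluted is that the emerging scholar chose a qualitative research method to answer these relationship questions, even though questions about relationships between variables are usually answered with quantitative, correlational designs. How much time would a researcher need to spend to understand these relationships in depth? Perhaps one or two in a single study, but nine variables? Counting only pairwise links, nine variables allow up to 36 relationships.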

Not only that, but the author also created a survey instrument combining closed- and open-ended questions. After collecting 197 anonymous responses, the emerging scholar reported descriptive statistics on the closed-ended ‘questions’ by group (contract, part-time, and full-time workers) and opined on the differences. No statistical tests; just observations.
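
The Python sketch below illustrates the descriptive approach described above. It tallies closed-ended answers into a group-by-response table and reports row percentages. The response categories, the records, and the helper names (`crosstab`, `row_percentages`) are hypothetical and invented for illustration. They are not taken from Ambrose (2022). Percentages like these describe how each group answered, but they offer no way to judge whether the differences between groups are larger than chance would produce.

```python
from collections import Counter

# Hypothetical records: (employment group, answer to one closed-ended item).
# These values are invented for illustration; they are NOT the study's data.
RESPONSES = [
    ("contract", "satisfied"), ("contract", "dissatisfied"),
    ("part-time", "satisfied"), ("part-time", "satisfied"),
    ("full-time", "dissatisfied"), ("full-time", "satisfied"),
    ("full-time", "dissatisfied"),
]

GROUPS = ["contract", "part-time", "full-time"]
CATEGORIES = ["satisfied", "dissatisfied"]  # hypothetical response labels


def crosstab(records, groups, categories):
    """Count (group, answer) pairs into a groups x categories table of counts."""
    counts = Counter(records)
    return [[counts[(g, c)] for c in categories] for g in groups]


def row_percentages(table):
    """Convert each row of counts into percentages of that row's total."""
    result = []
    for row in table:
        total = sum(row)
        result.append([100.0 * n / total if total else 0.0 for n in row])
    return result


if __name__ == "__main__":
    table = crosstab(RESPONSES, GROUPS, CATEGORIES)
    for group, counts, pcts in zip(GROUPS, table, row_percentages(table)):
        summary = ", ".join(
            f"{c}: {n} ({p:.1f}%)" for c, n, p in zip(CATEGORIES, counts, pcts)
        )
        print(f"{group:>10} | {summary}")
```

For these made-up records, the `part-time` row reads `satisfied: 2 (100.0%), dissatisfied: 0 (0.0%)`. Such a striking difference is easy to "observe" from only two respondents.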

I’m surprised the faculty advising this student didn’t know better. I converted the responses to fit a quantitative research design, ran a series of chi-square tests, and found at least one statistically significant result. I suppose I haven’t provided evidence for the emerging scholar’s study; I’ve only found evidence that human observation can be incorrect.
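
A chi-square test of independence asks whether the pattern of responses differs across groups more than chance alone would explain. Below is a minimal, dependency-free Python sketch. It assumes a groups x categories table of counts, like the one a crosstab produces. The sketch computes:

* expected counts E_ij = (row i total x column j total) / N;
* the statistic sum((O - E)^2 / E);
* degrees of freedom (r - 1)(c - 1);
* an upper-tail p-value, from closed-form expressions for the chi-square distribution.

The function names and the example table are hypothetical, and the exact tests I ran are not reproduced here. In practice, `scipy.stats.chi2_contingency` performs the same test.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with df degrees of freedom.

    Uses the recurrence Q(a + 1, y) = Q(a, y) + y**a * exp(-y) / Gamma(a + 1)
    for the regularized upper incomplete gamma function Q, starting from
    Q(1, y) = exp(-y) (even df) or Q(1/2, y) = erfc(sqrt(y)) (odd df),
    with y = x / 2; the answer is Q(df / 2, y).
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    y = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-y)
    else:
        a, q = 0.5, math.erfc(math.sqrt(y))
    # term holds y**a * exp(-y) / Gamma(a + 1), computed in log space for stability
    term = math.exp(a * math.log(y) - y - math.lgamma(a + 1))
    while a < df / 2.0:
        q += term
        term *= y / (a + 1)
        a += 1
    return q


def chi_square_independence(table):
    """Pearson chi-square test of independence on an r x c table of counts.

    Returns (statistic, degrees_of_freedom, p_value, expected_counts).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    if n == 0 or 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    statistic = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    df = (len(table) - 1) * (len(col_totals) - 1)
    return statistic, df, chi2_sf(statistic, df), expected


if __name__ == "__main__":
    # Hypothetical counts: rows = contract, part-time, full-time;
    # columns = two response categories. Invented for illustration only.
    observed = [[10, 20], [20, 10], [15, 15]]
    stat, df, p, _ = chi_square_independence(observed)
    print(f"chi-square = {stat:.3f}, df = {df}, p = {p:.4f}")
```

With these made-up counts, the sketch reports a statistic of about 6.667 on 2 degrees of freedom (p ≈ 0.036). The expected counts are returned so they can be checked against the common rule of thumb that most expected counts should be at least 5. Running several such tests also raises the chance of a false positive, which is worth keeping in mind when reading a single significant result.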

Reference:

Ambrose, M. K. T. (2022). *Examining organizational embeddedness of full-time, part-time, and contract workers within the values of job satisfaction, pay, supervisory relationships, and promotion* [Doctoral dissertation]. ProQuest Dissertations & Theses Global: The Humanities and Social Sciences Collection (299956545).